The Invisible Layer: How AI Mediates Your Day Without You Noticing

You Woke Up in a System

Before you opened your eyes, AI was already at work.

Your phone’s alarm adjusted to your calendar. Your smart thermostat recalibrated based on predicted outdoor temperatures. Your morning playlist was nudged by a recommender system. As you scrolled through the news, spam filters, engagement algorithms, and large language models quietly determined what you saw, what you didn’t, and what you might care about next.

You didn’t ask for any of this. But you didn’t exactly opt out either.

We don’t live with AI; we live through it. It has become an invisible interface between us and the world: ambient, automatic, always on. And because it’s invisible, it’s hard to question.

This essay is a map of that layer: not a warning, but a reckoning. To live ethically with technology, we first have to learn to see it.

1. What Counts as “AI” in Everyday Life?

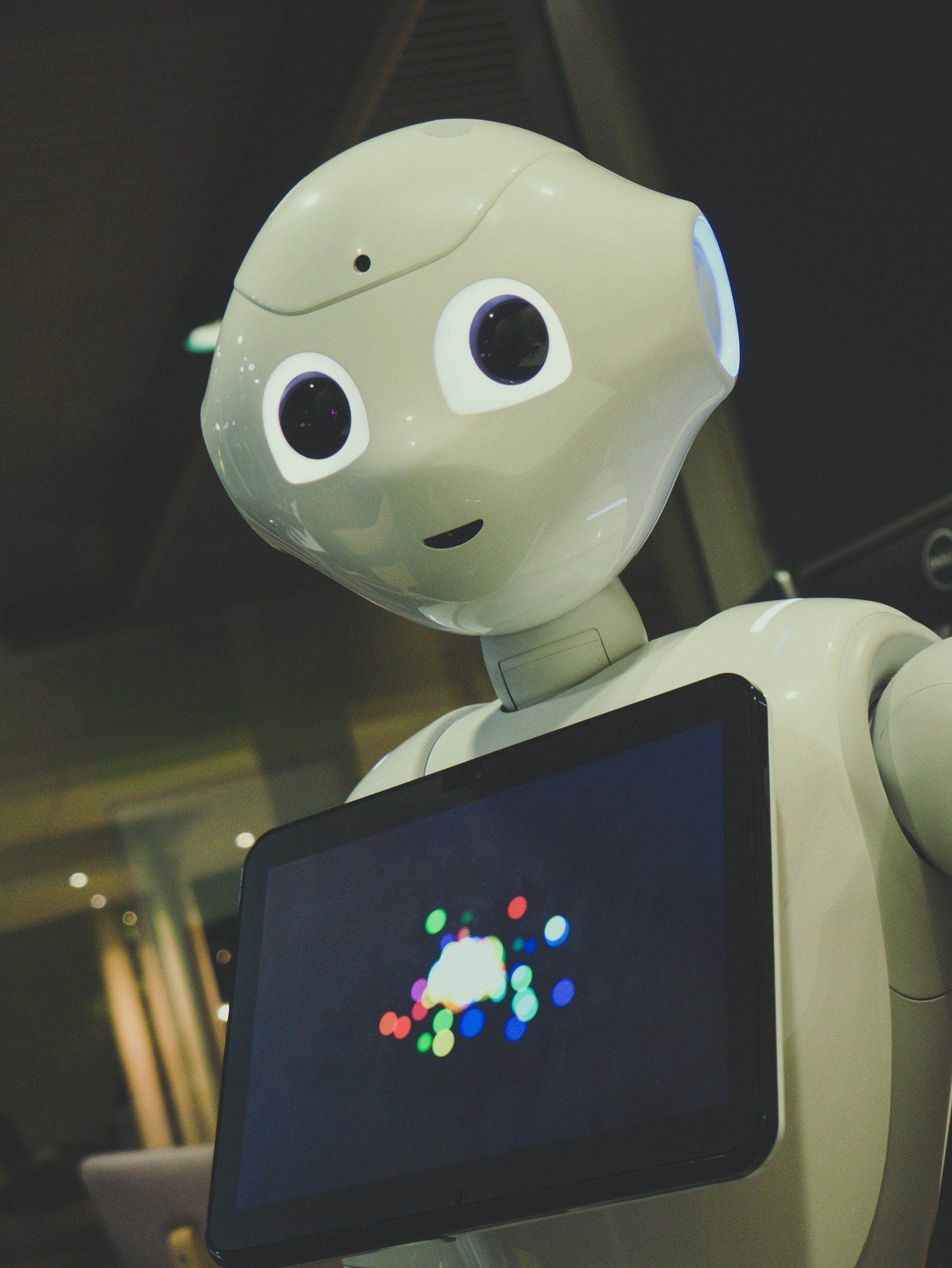

AI isn’t always dramatic. It isn’t only the robot barista or the chatty assistant on your phone. More often, it’s behind-the-scenes code that makes something feel seamless. When you navigate traffic with Waze or Google Maps, for instance, you’re relying on predictive modeling and real-time route optimization. When Gmail suggests “Do you want to schedule this meeting?” or your phone guesses which photo you’re searching for, that’s AI, too.

We’ve normalized it. We’ve absorbed it. We say “tech” or “smart” when we mean “trained on your data, optimized to predict.”

And that normalization makes it difficult to question how it works — or whether it should.

2. Where the Layer Lives

Search engines tailor your results based on past behavior.

E-commerce platforms personalize pricing, recommendations, even delivery dates based on predictive models.

Streaming services guide your attention with learned rhythms: the intro skip, the autoplay delay, the thumbnail preview.

Smart devices such as vacuums, thermostats, and fridges make micro-decisions based on machine-learned routines.

Camera apps use AI to enhance images in real time — smoothing skin, correcting exposure, choosing which faces to focus on.

We no longer choose these interactions. They scaffold our routines. They anticipate, simplify, suggest — often helpfully. But always subtly.

3. Why This Matters: The Ethics of Frictionlessness

The invisible layer is designed to remove friction. That’s the pitch: less time thinking, more time doing. But friction, discomfort, and delay are often how we notice when something is off.

When algorithms remove friction, they also remove resistance — the kind that invites reflection.

You don’t notice that your feed is repeating itself. That the product you were recommended is more expensive than last week. That the “instant” response you got came from a model trained on millions of unpaid human inputs.

And when you don’t notice, you don’t ask. You don’t challenge. You don’t choose differently.

4. Reclaiming Attention: Learning to See the Layer

Living ethically in AI-mediated systems doesn’t require unplugging. It just requires awareness. A kind of digital literacy that starts not with code, but with curiosity.

Try this:

Ask why something was recommended.

Notice patterns in your auto-complete.

Observe which apps guess your next move — and how well they do it.

Turn off personalization for a day. See what changes.

Small acts of awareness make the layer visible. And once it’s visible, it becomes designable. Contestable. Optional.

Conclusion: Ambient Systems, Active Choices

We may not choose to live with AI. But we can choose how we live with it.

The invisible layer won’t disappear. It will only become more embedded, more anticipatory, more emotionally intelligent. So the work isn’t just technical — it’s cognitive. Psychological. Cultural.

If we want AI systems that serve human values, we must learn to notice the systems that already shape them.

The future doesn’t begin when robots arrive. It begins every morning, when the layer wakes up before we do.

References and Resources

The following sources inform the ethical, legal, and technical guidance shared throughout The Daisy-Chain:

U.S. Copyright Office: Policy on AI and Human Authorship

Official guidance on copyright eligibility for AI-generated works.

UNESCO: AI Ethics Guidelines

Global framework for responsible and inclusive use of artificial intelligence.

Partnership on AI

Research and recommendations on fair, transparent AI development and use.

OECD AI Principles

International standards for trustworthy AI.

Stanford Center for Research on Foundation Models (CRFM)

Research on large-scale models, limitations, and safety concerns.

MIT Technology Review – AI Ethics Coverage

Accessible, well-sourced articles on AI use, bias, and real-world impact.

OpenAI’s Usage Policies and System Card (for ChatGPT & DALL·E)

Policy information for responsible AI use in consumer tools.