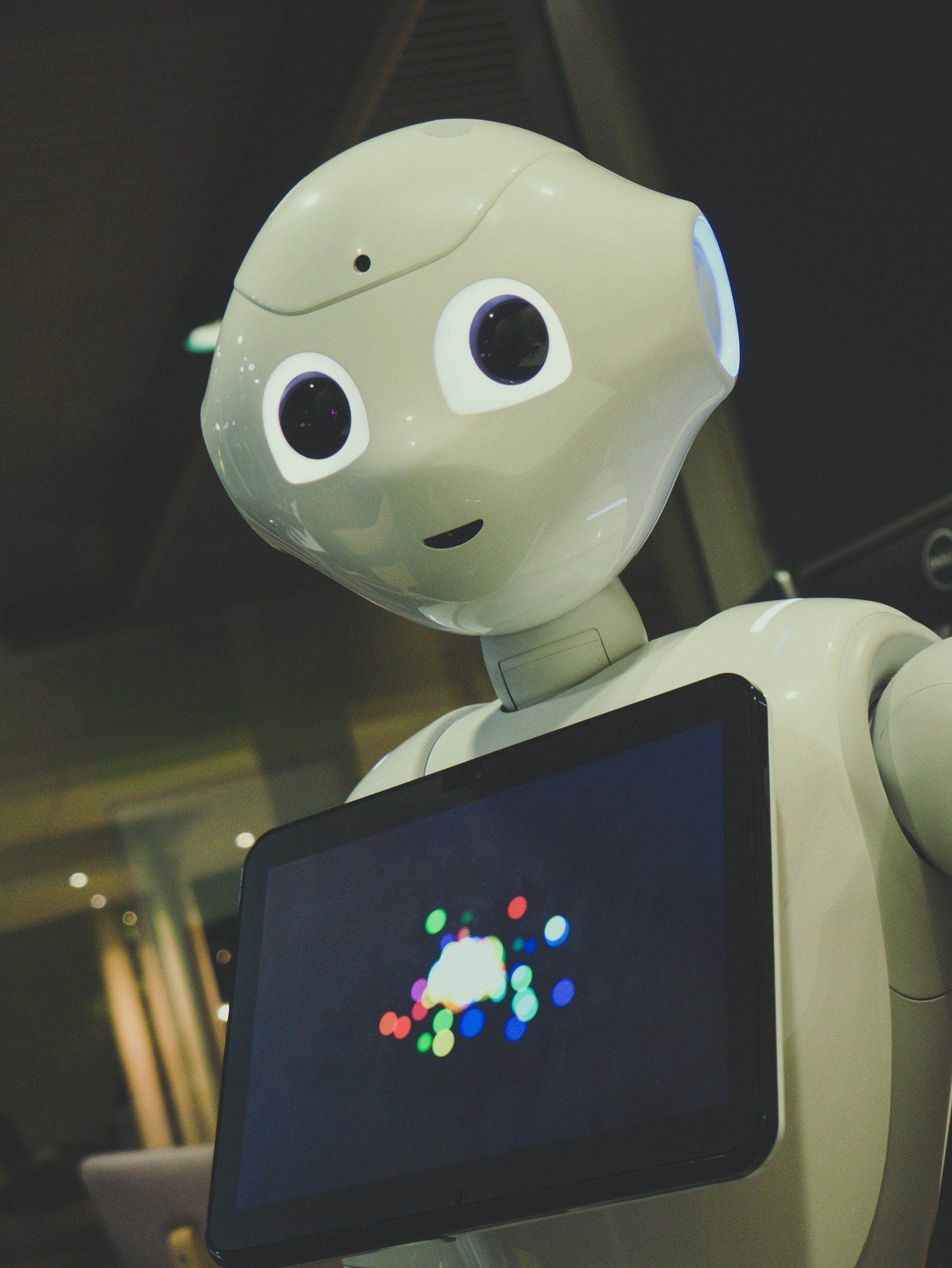

Can We Trust AI? Examining the Role of Context, Transparency, and Agency in Human-AI Relationships

As artificial intelligence becomes a part of everyday life—from recommending movies to helping diagnose diseases—a critical question arises: can we trust it? Trust is foundational to any relationship, including those we form with machines. But what does it mean to trust AI, and how can designers, developers, and users foster trust in intelligent systems? This article explores the dynamics of trust and AI, focusing on the importance of context, agency, and transparency in building ethical and effective human-AI interactions.

Defining Trust in AI Systems

Trust in AI refers to the degree of confidence that users place in a system’s reliability, safety, and fairness. Unlike human trust, which is often emotional or intuitive, trust in AI tends to be more analytical. Users must decide whether an AI system will behave as expected, make accurate decisions, and act in their best interest.

Building trust requires a combination of factors:

Consistency: Does the AI perform reliably across tasks and conditions?

Competence: Is the AI accurate and capable within its domain?

Integrity: Does the AI operate transparently and ethically?

Context-awareness: Can the AI adapt its behavior based on situational factors?

The Importance of Context

Context shapes how humans interpret and evaluate AI behavior. A recommendation system that works well in one cultural or social setting may perform poorly in another, and users are more likely to trust AI that demonstrates awareness of their situation.

Contextual AI systems understand not just data, but the circumstances around data. For example, a medical AI must consider not only symptoms but also patient history, geography, and social determinants of health. Context enables more nuanced, ethical, and relevant decision-making.

AI Agency and Perceived Control

Agency in AI refers to a system's ability to make decisions or take actions without direct human input. While agency increases the utility of AI systems, it can also raise concerns about control and unpredictability.

Users tend to trust systems that balance autonomy with control. For example, a self-driving car that allows human override in critical moments can foster greater user confidence. Similarly, AI assistants that explain their reasoning and allow users to approve or reject actions are perceived as more trustworthy.
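The approve-or-reject pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ProposedAction`, `run_with_approval`, and the two callbacks are hypothetical names invented for this example, not part of any real assistant framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    """A hypothetical action the assistant would like to take."""
    description: str  # what the assistant wants to do
    rationale: str    # why, shown to the user before they decide


def run_with_approval(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    execute: Callable[[ProposedAction], str],
) -> str:
    """Execute the action only if the human approver accepts it."""
    if approve(action):
        return execute(action)
    # The human keeps the final say: nothing runs without approval.
    return f"Rejected by user: {action.description}"


action = ProposedAction(
    description="Send the weekly report to the team",
    rationale="It is Friday and the report is ready.",
)

# In a real interface `approve` would prompt the user; here it always declines.
print(run_with_approval(
    action,
    approve=lambda a: False,
    execute=lambda a: f"Done: {a.description}",
))
```

The design choice worth noting is that the rationale travels with the action, so the user sees the reasoning at the moment they are asked to decide.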

Transparency and Explainability

One of the most effective ways to build trust in AI is through transparency. Explainable AI (XAI) allows users to understand why and how a decision was made, which is essential for informed consent and accountability.

Transparency involves:

Clear communication: Explain the purpose and limitations of the AI.

Decision rationale: Show how outcomes were determined.

Data provenance: Disclose where training data came from and how it was handled.

Opaque "black-box" AI systems, by contrast, often erode trust, especially when decisions have high-stakes consequences.
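As a rough illustration of what a "decision rationale" might look like in practice, the sketch below scores an input with a simple weighted sum and reports how much each factor contributed. It assumes a linear scoring model, which is far simpler than most deployed systems; the function name, feature names, and weights are all invented for the example.

```python
def explain_score(
    features: dict[str, float],
    weights: dict[str, float],
    bias: float = 0.0,
) -> tuple[float, list[tuple[str, float]]]:
    """Return a score and each feature's contribution, largest effect first."""
    # For a linear model, a feature's contribution is simply weight * value.
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank by absolute size so the most influential factors are listed first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical applicant data and weights, for illustration only.
score, ranked = explain_score(
    features={"income": 2.0, "debt": 1.0},
    weights={"income": 0.5, "debt": -1.5},
)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

More complex models generally need dedicated explanation techniques, but the goal is the same: show the user which inputs drove the outcome.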

Ethical Design and Human-Centric AI

Ethical AI design puts human values at the forefront. Trust is not just a technical challenge, but a moral one. Developers should consider who the AI serves, how power is distributed, and what long-term impacts might arise.

Best practices include:

User involvement: Engage users in design and testing.

Bias mitigation: Identify and correct discriminatory patterns in training data.

Consent mechanisms: Allow users to opt in or out of data collection and automated decisions.

Feedback loops: Create ways for users to report errors or challenge decisions.

Trust as a Two-Way Street

Just as users must decide whether to trust AI, AI systems should also be designed to trust human input. Systems that learn from user behavior, incorporate feedback, and ask for clarification when uncertain can improve both accuracy and engagement.
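One simple way a system can "ask for clarification when uncertain" is to act only when its confidence clears a threshold. The sketch below assumes the system already produces a confidence value per candidate interpretation; the `respond` function, the 0.75 threshold, and the example intents are hypothetical choices made for illustration.

```python
def respond(predictions: dict[str, float], threshold: float = 0.75) -> str:
    """Act on the top interpretation, or ask the user when confidence is low."""
    label, confidence = max(predictions.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return f"Proceeding with '{label}'"
    # Not confident enough: offer the two most likely options to the user.
    top_two = sorted(predictions, key=predictions.get, reverse=True)[:2]
    return "Did you mean " + " or ".join(top_two) + "?"


# Confident case: the system acts on its own.
print(respond({"book flight": 0.9, "book hotel": 0.1}))

# Uncertain case: the system defers to the human.
print(respond({"book flight": 0.5, "book hotel": 0.45, "cancel trip": 0.05}))
```

Where to set the threshold is a judgment call: too low and the system acts on guesses, too high and it burdens the user with constant questions.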

This mutual trust leads to more effective collaboration. In high-stakes environments like healthcare or aviation, human-AI teaming is most successful when each side's role reflects its strengths and limitations.

Conclusion

Trust in artificial intelligence is not automatic—it must be earned. Contextual understanding, agency balance, and transparent design are all essential to fostering ethical and effective relationships between humans and AI systems. As we integrate intelligent machines more deeply into our lives, building trust will determine not just adoption, but the integrity and impact of the technology itself. By designing AI that is explainable, context-aware, and aligned with human values, we can ensure that trust becomes a cornerstone of the AI era.